In every case where AI has reached superhuman ability, human experience became obsolete. Take AlphaZero (DeepMind): it mastered chess purely by playing against itself, millions of games, and hit a superhuman level in hours!

Current LLM training uses human feedback to build reward models. But why do we need humans if the goal is to lift LLM linguistic abilities to superhuman levels? 🤔

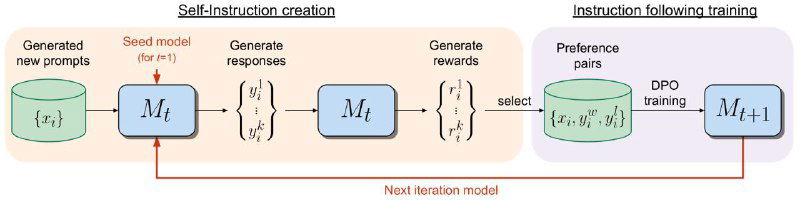

Solution? Self-rewarding LLMs that act as their own reward model: the model itself is “used via LLM-as-a-Judge prompting to provide its own rewards during training”. Tested on Llama 2 70B, this method outperformed many systems on the AlpacaEval 2.0 leaderboard, including Claude 2, Gemini Pro, and GPT-4 0613 (report)
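
For the curious, here is a rough sketch of how one round of that loop could look. It is a toy illustration, not the authors' code: `build_preference_pairs`, `toy_generate`, and `toy_judge` are hypothetical names, and the two toy functions stand in for what would really be the same LLM, once answering the prompt and once grading the answer via an LLM-as-a-Judge prompt. The best and worst answers per prompt become a preference pair for the next round of training (the paper uses DPO for that step).

```python
from typing import Callable, List, Tuple


def build_preference_pairs(
    prompts: List[str],
    generate: Callable[[str, int], List[str]],
    judge: Callable[[str, str], float],
    n_candidates: int = 4,
) -> List[Tuple[str, str, str]]:
    """Return (prompt, chosen, rejected) triples scored by the model itself."""
    pairs = []
    for prompt in prompts:
        # 1. The model samples several candidate answers.
        candidates = generate(prompt, n_candidates)
        # 2. The same model, prompted as a judge, scores each answer.
        scored = [(judge(prompt, c), c) for c in candidates]
        best = max(scored, key=lambda s: s[0])
        worst = min(scored, key=lambda s: s[0])
        # 3. Keep the pair only if the judge actually prefers one answer.
        if best[0] > worst[0]:
            pairs.append((prompt, best[1], worst[1]))
    return pairs


# Hypothetical stand-ins so the sketch runs without a real model.
def toy_generate(prompt: str, n: int) -> List[str]:
    return [prompt + " answer" * (i + 1) for i in range(n)]


def toy_judge(prompt: str, response: str) -> float:
    # Pretend score on a 0-5 scale: longer is better, capped at 5.
    return float(min(len(response.split()), 5))


pairs = build_preference_pairs(["What is DPO?"], toy_generate, toy_judge)
print(pairs)
```

In a real setup the resulting pairs would go into a preference-optimization step, and the improved model would then generate and judge the next batch.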

What's cool? This turns the speculation that "people are not needed for LLM self-improvement" into practical reality [for the first time, I think]

So, one day Placy will invite Placy Pro to a psychology course, and she, in turn, will invite her sister to sales training 😀